Building Event-Driven Microservices with Spring Cloud Stream and Kafka

A practical guide to building scalable, resilient systems with Spring Cloud Stream and Apache Kafka

Modern applications face increasingly complex challenges in handling data and interactions between services. As our systems grow, traditional request-response patterns often become bottlenecks, which pushes us to seek more flexible, scalable solutions. This is where event-driven architecture (EDA) comes in. It offers a powerful way to build resilient microservices.

Why event-driven architecture?

Think of event-driven architecture like a city’s postal system. Instead of calling each other directly, services send messages (events) through a central messaging system. This lets each service operate without depending on the availability of the others, much as different post offices operate without knowing each other’s internal workings. When one service has something important to share, it publishes an event, and any interested services can react to it in their own time.
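To make the decoupling concrete, here is a minimal in-memory sketch of publish/subscribe in plain Java. The class `InMemoryEventBus` and the "analytics service" subscriber are hypothetical names used only for illustration. Unlike a real broker, this toy delivers events synchronously and keeps nothing on disk.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-memory stand-in for a message broker; not part of Spring or Kafka.
final class InMemoryEventBus {

    // Topic name -> handlers registered for that topic.
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    // A consumer registers interest in a topic without knowing who publishes to it.
    void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    // A producer publishes without knowing who, if anyone, is listening.
    // Returns the number of handlers that received the event.
    int publish(String topic, String event) {
        List<Consumer<String>> handlers = subscribers.getOrDefault(topic, List.of());
        handlers.forEach(handler -> handler.accept(event));
        return handlers.size();
    }

    public static void main(String[] args) {
        InMemoryEventBus bus = new InMemoryEventBus();
        bus.subscribe("order-events", e -> System.out.println("notification service got: " + e));
        bus.subscribe("order-events", e -> System.out.println("analytics service got: " + e));
        bus.publish("order-events", "order 42 created");
    }
}
```

The publisher never references a subscriber, so a new subscriber can be added without touching the publishing code.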

Why Spring Cloud Stream and Kafka?

Implementing event-driven architecture might seem complex, but Spring Cloud Stream and Apache Kafka simplify it considerably. Spring Cloud Stream provides an abstraction over message brokers and handles most of the messaging infrastructure for you. Think of it as your personal event-handling assistant that knows how to talk to different messaging systems.

Apache Kafka serves as our reliable message broker. It’s like a super-efficient postal service: it can handle millions of messages, stores them durably, and delivers them to the consumers that subscribe to them. Together, these technologies create a powerful foundation for building event-driven systems.

What we’ll build

To make this practical, we’ll build a real-world example: an order processing system. We’ll create two microservices:

- An order service that announces when someone places a new order.

- A notification service that listens for these announcements and sends notifications.

This example will teach you to set up these services, manage messages and errors, and bring your system to a production-ready state, or close to it. By the end of this tutorial, you’ll have a solid understanding of how to build event-driven microservices that can scale with your needs.

Ready to get started? Let’s begin by setting up our development environment.

Prerequisites and local development setup

Before diving into the implementation, let’s set up our development environment. We’ll need a running Kafka instance, and the easiest way to get started is to use Docker Compose for local development.

First, let’s create a new Spring Boot project with the necessary dependencies. Add these to your pom.xml:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>io.vrnsky</groupId>

<artifactId>event-driven-architecture</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>pom</packaging>

<modules>

<module>notification-service</module>

<module>order-service</module>

</modules>

<properties>

<java.version>17</java.version>

<spring-boot.version>3.2.0</spring-boot.version>

<spring-cloud.version>2023.0.0</spring-cloud.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-stream</artifactId>

</dependency>

<!-- Kafka Binder -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-stream-binder-kafka</artifactId>

</dependency>

<!-- Spring Web for REST endpoints -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<!-- Lombok to reduce boilerplate -->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring-cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring-boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

</project>

Now let’s set up our local Kafka environment. Create a docker-compose.yml file in your project root:

version: '3.8'
services:
  zookeeper:
    image: confluentinc/cp-zookeeper:latest
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000
    ports:
      - "22181:2181"
  kafka:
    image: confluentinc/cp-kafka:latest
    depends_on:
      - zookeeper
    ports:
      - "29092:29092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:9092,PLAINTEXT_HOST://localhost:29092
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
  kafka-ui:
    image: provectuslabs/kafka-ui:latest
    depends_on:
      - kafka
    ports:
      - "8080:8080"
    environment:
      KAFKA_CLUSTERS_0_NAME: local
      # Added so the UI can reach the broker on its internal listener
      KAFKA_CLUSTERS_0_BOOTSTRAPSERVERS: kafka:9092

Our development environment includes:

- A Kafka broker for message handling.

- ZooKeeper for Kafka cluster management.

- A web UI for monitoring Kafka.

To start the environment, run:

docker compose up -d

Project structure

event-driven-architecture/
├── docker-compose.yml
├── pom.xml
├── order-service/
│   └── pom.xml
└── notification-service/
    └── pom.xml

Implementing the Order Service

Let’s start by creating the order service. It will handle order creation and publish events to Kafka. We’ll implement it step by step.

First, let’s define our domain model and event objects:

package io.vrnsky.order.model;

import lombok.Data;

import java.math.BigDecimal;

import java.util.UUID;

@Data

public class Order {

private UUID id;

private String customerEmail;

private BigDecimal totalAmount;

private OrderStatus status;

}

public enum OrderStatus {

CREATED, CONFIRMED, SHIPPED, DELIVERED, CANCELLED

}

@Data

public class OrderEvent {

private UUID orderId;

private String customerEmail;

private OrderStatus status;

private String message;

private long timestamp;

}

Now, let’s create our message producer using Spring Cloud Stream:

package io.vrnsky.order.config;

import io.vrnsky.order.model.OrderEvent;

import lombok.RequiredArgsConstructor;

import org.springframework.cloud.stream.function.StreamBridge;

import org.springframework.kafka.support.KafkaHeaders;

import org.springframework.messaging.support.MessageBuilder;

import org.springframework.stereotype.Component;

@Component

@RequiredArgsConstructor

public class OrderEventProducer {

private final StreamBridge streamBridge;

public void sendEvent(OrderEvent orderEvent) {

streamBridge.send(

"orderEvents-out-0",

MessageBuilder

.withPayload(orderEvent)

.setHeader(KafkaHeaders.KEY, orderEvent.getOrderId().toString())

.build()

);

}

}
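The producer sets the record key to the order ID. Kafka chooses a partition from the key, so all events for one order land on the same partition and keep their relative order. The sketch below illustrates the idea in plain Java. It is a simplification: Kafka's default partitioner hashes the serialized key with murmur2, while this sketch uses `String.hashCode()` as a stand-in, so the partition numbers will not match a real broker. The class name and the partition count of 3 are assumptions.

```java
import java.util.UUID;

// Simplified illustration of key-based partitioning. Kafka's default partitioner
// uses a murmur2 hash of the serialized key; String.hashCode() is a stand-in here.
final class KeyPartitioningSketch {

    static int partitionFor(String key, int partitionCount) {
        // floorMod keeps the result in [0, partitionCount) even for negative hashes.
        return Math.floorMod(key.hashCode(), partitionCount);
    }

    public static void main(String[] args) {
        String orderId = UUID.randomUUID().toString();
        int partitions = 3; // assumed partition count for the order-events topic
        // Both events share a key, so both map to the same partition.
        System.out.println("CREATED -> partition " + partitionFor(orderId, partitions));
        System.out.println("SHIPPED -> partition " + partitionFor(orderId, partitions));
    }
}
```

Ordering is only guaranteed within a partition, which is why the key matters for events that must be processed in sequence.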

Let’s create the service layer to handle order operations:

package io.vrnsky.order.service;

import io.vrnsky.order.config.OrderEventProducer;

import io.vrnsky.order.model.Order;

import io.vrnsky.order.model.OrderEvent;

import io.vrnsky.order.model.OrderStatus;

import lombok.RequiredArgsConstructor;

import org.springframework.stereotype.Service;

import java.util.HashMap;

import java.util.Map;

import java.util.UUID;

@Service

@RequiredArgsConstructor

public class OrderService {

private final Map<UUID, Order> orders = new HashMap<>();

private final OrderEventProducer orderEventProducer;

public Order createOrder(Order order) {

order.setId(UUID.randomUUID());

order.setStatus(OrderStatus.CREATED);

orders.put(order.getId(), order);

OrderEvent event = new OrderEvent();

event.setOrderId(order.getId());

event.setCustomerEmail(order.getCustomerEmail());

event.setStatus(order.getStatus());

event.setMessage("Order created successfully");

event.setTimestamp(System.currentTimeMillis());

orderEventProducer.sendEvent(event);

return order;

}

public Order getOrder(UUID orderId) {

return orders.get(orderId);

}

public Order updateOrderStatus(UUID id, OrderStatus status) {

Order order = orders.get(id);

if (order != null) {

order.setStatus(status);

OrderEvent event = new OrderEvent();

event.setOrderId(order.getId());

event.setCustomerEmail(order.getCustomerEmail());

event.setStatus(order.getStatus());

event.setMessage(String.format("Status for order with id %s was updated to %s", id, status));

event.setTimestamp(System.currentTimeMillis());

// Publish the status change so that consumers are notified

orderEventProducer.sendEvent(event);

}

return order;

}

}

Now, let’s create the REST controller:

package io.vrnsky.order.controller;

import io.vrnsky.order.model.Order;

import io.vrnsky.order.model.OrderStatus;

import io.vrnsky.order.service.OrderService;

import lombok.RequiredArgsConstructor;

import org.springframework.http.ResponseEntity;

import org.springframework.web.bind.annotation.*;

import java.util.UUID;

@RestController

@RequestMapping("/api/orders")

@RequiredArgsConstructor

public class OrderController {

private final OrderService orderService;

@PostMapping

public ResponseEntity<Order> createOrder(@RequestBody Order order) {

return ResponseEntity.ok(orderService.createOrder(order));

}

@GetMapping("/{id}")

public ResponseEntity<Order> getOrder(@PathVariable UUID id) {

Order order = orderService.getOrder(id);

return order != null ? ResponseEntity.ok(order) : ResponseEntity.notFound().build();

}

@PutMapping("/{id}/status")

public ResponseEntity<Order> updateOrderStatus(

@PathVariable UUID id,

@RequestParam OrderStatus status) {

Order updated = orderService.updateOrderStatus(id, status);

return updated != null ? ResponseEntity.ok(updated) : ResponseEntity.notFound().build();

}

}

Finally, let’s configure our application:

spring:
  application:
    name: order-service
  kafka:
    properties:
      spring.json.trusted.packages: io.vrnsky.*
  cloud:
    stream:
      kafka:
        binder:
          brokers: localhost:29092
          configuration:
            key:
              serializer: org.apache.kafka.common.serialization.StringSerializer
              deserializer: org.apache.kafka.common.serialization.StringDeserializer
            value:
              serializer: org.springframework.kafka.support.serializer.JsonSerializer
              deserializer: org.springframework.kafka.support.serializer.JsonDeserializer
            spring.json.trusted.packages: io.vrnsky.*
      bindings:
        orderEvents-out-0:
          destination: order-events
          content-type: application/json
          producer:
            use-native-encoding: true
server:
  port: 8081

This implementation:

- Handles basic order operations (create, get, update).

- Publishes events when the order status changes.

- Uses Kafka as the message broker.

- Provides a REST API for order management.

Implementing the Notification Service

Let’s create the notification service that will consume order events and handle notifications. We’ll use the same event model from the order service.

First, let's put in place the consumer for order events:

package io.vrnsky.notification.messaging;

import io.vrnsky.notification.model.OrderEvent;

import lombok.extern.slf4j.Slf4j;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.util.function.Consumer;

@Slf4j

@Configuration

public class OrderEventConsumer {

@Bean

public Consumer<OrderEvent> handleOrderEvent() {

return event -> {

log.info("Received order event: {}", event);

switch (event.getStatus()) {

case CREATED -> sendOrderConfirmation(event);

case SHIPPED -> sendShipmentNotification(event);

case DELIVERED -> sendDeliveryConfirmation(event);

case CANCELLED -> sendCancellationNotification(event);

}

};

}

private void sendOrderConfirmation(OrderEvent event) {

log.info("Sending order confirmation email to {} for order {}",

event.getCustomerEmail(), event.getOrderId());

// Email sending logic would go here

}

private void sendShipmentNotification(OrderEvent event) {

log.info("Sending shipment notification email to {} for order {}",

event.getCustomerEmail(), event.getOrderId());

// Shipment notification logic

}

private void sendDeliveryConfirmation(OrderEvent event) {

log.info("Sending delivery confirmation email to {} for order {}",

event.getCustomerEmail(), event.getOrderId());

// Delivery confirmation logic

}

private void sendCancellationNotification(OrderEvent event) {

log.info("Sending cancellation notification email to {} for order {}",

event.getCustomerEmail(), event.getOrderId());

// Cancellation notification logic

}

}

Consumer for order events
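Note that the switch above has no branch for CONFIRMED, so those events are logged and then ignored. A switch statement over an enum does not have to cover every constant, but a switch expression does. The following stand-alone sketch shows how a switch expression makes a missing status a compile error. The nested enum mirrors `OrderStatus`, and the template names are hypothetical.

```java
// Stand-alone sketch; the enum mirrors OrderStatus from the order service,
// and the template names are hypothetical.
final class NotificationRouting {

    enum OrderStatus { CREATED, CONFIRMED, SHIPPED, DELIVERED, CANCELLED }

    // A switch *expression* over an enum must cover every constant,
    // so adding a new status later becomes a compile error here.
    static String templateFor(OrderStatus status) {
        return switch (status) {
            case CREATED -> "order-confirmation";
            case CONFIRMED -> "none"; // no customer-facing message in this sketch
            case SHIPPED -> "shipment-notification";
            case DELIVERED -> "delivery-confirmation";
            case CANCELLED -> "cancellation-notification";
        };
    }

    public static void main(String[] args) {
        for (OrderStatus status : OrderStatus.values()) {
            System.out.println(status + " -> " + templateFor(status));
        }
    }
}
```

Whether CONFIRMED should trigger a message is a business decision; the point is only that the choice becomes explicit.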

Let's create a service to handle the actual notification sending:

package io.vrnsky.notification.service;

import io.vrnsky.notification.model.NotificationRequest;

import lombok.extern.slf4j.Slf4j;

import org.springframework.stereotype.Service;

@Slf4j

@Service

public class NotificationService {

public void sendEmail(NotificationRequest request) {

// In a real application, this would use an email service

// like SendGrid, Amazon SES, etc.

log.info("Sending email notification: {}", request);

}

public void sendSms(NotificationRequest request) {

// In a real application, this would use an SMS service

// like Twilio, Amazon SNS, etc.

log.info("Sending SMS notification: {}", request);

}

}

@Data

public class NotificationRequest {

private String recipient;

private String subject;

private String content;

private NotificationType type;

private Map<String, String> metadata;

}

public enum NotificationType {

EMAIL, SMS

}

Notification Service implementation

Let’s add retry capability for failed notifications:

package io.vrnsky.notification.config;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.retry.RetryPolicy;

import org.springframework.retry.policy.SimpleRetryPolicy;

import org.springframework.retry.support.RetryTemplate;

@Configuration

public class RetryConfig {

@Bean

public RetryTemplate retryTemplate() {

RetryTemplate retryTemplate = new RetryTemplate();

RetryPolicy retryPolicy = new SimpleRetryPolicy(3); // Up to 3 attempts in total, including the first call

retryTemplate.setRetryPolicy(retryPolicy);

return retryTemplate;

}

}

Retry configuration
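To show what a bounded retry does at runtime, here is a plain-Java sketch of the control flow. It is not Spring Retry's implementation, and `RetrySketch`, `withRetry`, and the flaky sender are hypothetical names. As with the policy above, `maxAttempts` counts the first call as well.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Plain-Java sketch of a bounded retry loop; it is not Spring Retry's implementation.
final class RetrySketch {

    // Runs the action up to maxAttempts times in total and rethrows the last failure.
    static <T> T withRetry(int maxAttempts, Supplier<T> action) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e; // remember the failure and try again if attempts remain
            }
        }
        throw last;
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        // Hypothetical flaky sender: fails twice, then succeeds on the third attempt.
        String result = withRetry(3, () -> {
            if (calls.incrementAndGet() < 3) {
                throw new IllegalStateException("mail server unavailable");
            }
            return "sent";
        });
        System.out.println(result + " after " + calls.get() + " attempts");
    }
}
```

A real retry policy would usually wait between attempts and retry only on exceptions that are likely to be transient.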

Finally, configure the application properties.

spring:
  application:
    name: notification-service
  kafka:
    properties:
      spring.json.trusted.packages: io.vrnsky.*
  cloud:
    function:
      definition: handleOrderEvent
    stream:
      bindings:
        handleOrderEvent-in-0:
          destination: order-events
          group: notification-group
          consumer:
            maxAttempts: 3
            backOffInitialInterval: 1000
            backOffMaxInterval: 10000
            backOffMultiplier: 2.0
      kafka:
        binder:
          brokers: localhost:29092
          consumer-properties:
            auto.offset.reset: earliest
          configuration:
            key:
              serializer: org.apache.kafka.common.serialization.StringSerializer
              deserializer: org.apache.kafka.common.serialization.StringDeserializer
            value:
              serializer: org.springframework.kafka.support.serializer.JsonSerializer
              deserializer: org.springframework.kafka.support.serializer.JsonDeserializer
            spring.json.trusted.packages: io.vrnsky.*
server:
  port: 8082
logging:
  level:
    io.vrnsky.notification: DEBUG

This implementation:

- Consumes order events from Kafka.

- Processes different types of notifications based on order status.

- Includes retry logic for resilience.

- Simulates sending notifications (with logging).
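The consumer binding settings (maxAttempts, backOffInitialInterval, backOffMaxInterval, backOffMultiplier) describe an exponential backoff. The sketch below computes the resulting wait times, assuming the delay starts at the initial interval, is multiplied after every failed attempt, and is capped at the maximum interval. The class `BackoffSchedule` is a hypothetical helper, not the binder's own code.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of an exponential backoff schedule; parameter names mirror the binding
// properties, but this is not the binder's own code.
final class BackoffSchedule {

    // Returns the wait (in ms) before each retry; there is one wait fewer than attempts.
    static List<Long> delays(int maxAttempts, long initialMs, double multiplier, long maxMs) {
        List<Long> waits = new ArrayList<>();
        double next = initialMs;
        for (int retry = 1; retry < maxAttempts; retry++) {
            waits.add(Math.min((long) next, maxMs));
            next *= multiplier;
        }
        return waits;
    }

    public static void main(String[] args) {
        // Values from the notification service configuration.
        System.out.println(delays(3, 1000, 2.0, 10000));
        // More attempts show the cap at backOffMaxInterval.
        System.out.println(delays(6, 1000, 2.0, 10000));
    }
}
```

Under these assumptions, three attempts mean two waits of 1 and 2 seconds, so a message that keeps failing is given up on after roughly 3 seconds plus processing time.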

In a production environment, you would:

- Integrate with real email/SMS providers.

- Add metrics and monitoring.

- Implement dead letter queues for failed messages.

- Add more sophisticated error handling.
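As an illustration of the dead letter idea from the list above, here is an in-memory sketch: a message that still fails after the allowed attempts is parked in a separate list instead of blocking the messages behind it. With Kafka, the dead letters would normally go to a separate topic, and the Kafka binder has consumer properties for this (such as enableDlq). The class and handler below are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// In-memory sketch of dead-letter handling; real setups publish to a separate Kafka topic.
final class DeadLetterSketch {

    final List<String> deadLetters = new ArrayList<>();

    // Processes each message with up to maxAttempts tries; messages that still fail
    // are parked in deadLetters so the rest of the queue keeps flowing.
    void drain(Queue<String> messages, Consumer<String> handler, int maxAttempts) {
        while (!messages.isEmpty()) {
            String message = messages.poll();
            boolean handled = false;
            for (int attempt = 1; attempt <= maxAttempts && !handled; attempt++) {
                try {
                    handler.accept(message);
                    handled = true;
                } catch (RuntimeException e) {
                    // Swallow and retry; a real handler would log the failure.
                }
            }
            if (!handled) {
                deadLetters.add(message);
            }
        }
    }

    public static void main(String[] args) {
        DeadLetterSketch sketch = new DeadLetterSketch();
        Queue<String> queue = new ArrayDeque<>(List.of("order-1", "poison", "order-2"));
        // Hypothetical handler that can never process the "poison" message.
        sketch.drain(queue, m -> {
            if (m.equals("poison")) {
                throw new IllegalStateException("cannot process " + m);
            }
            System.out.println("processed " + m);
        }, 3);
        System.out.println("dead letters: " + sketch.deadLetters);
    }
}
```

Parked messages can then be inspected, fixed, and replayed without stopping the main consumer.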

Let’s add monitoring to both services using Spring Boot Actuator, Prometheus, and Grafana.

First, let’s add the necessary dependencies to both services’ pom.xml:

<!-- Actuator for exposing metrics -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

</dependency>

<!-- Micrometer Prometheus registry -->

<dependency>

<groupId>io.micrometer</groupId>

<artifactId>micrometer-registry-prometheus</artifactId>

</dependency>

Let’s update our docker-compose.yml to include Prometheus and Grafana:

version: '3.8'
services:
  # ... existing Kafka services ...
  prometheus:
    image: prom/prometheus:latest
    ports:
      - "9090:9090"
    volumes:
      - ./prometheus/prometheus.yml:/etc/prometheus/prometheus.yml
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
  grafana:
    image: grafana/grafana:latest
    ports:
      - "3000:3000"
    environment:
      - GF_SECURITY_ADMIN_USER=admin
      - GF_SECURITY_ADMIN_PASSWORD=admin
    volumes:
      - ./grafana/provisioning:/etc/grafana/provisioning
    depends_on:
      - prometheus

Create the Prometheus configuration in prometheus/prometheus.yml:

global:
  scrape_interval: 15s
scrape_configs:
  - job_name: 'order-service'
    metrics_path: '/actuator/prometheus'
    static_configs:
      - targets: ['host.docker.internal:8081']
  - job_name: 'notification-service'
    metrics_path: '/actuator/prometheus'
    static_configs:
      - targets: ['host.docker.internal:8082']

Add custom metrics to the order service:

package io.vrnsky.order.config;

import io.micrometer.core.instrument.Counter;

import io.micrometer.core.instrument.MeterRegistry;

import io.micrometer.core.instrument.Timer;

import lombok.RequiredArgsConstructor;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

@Configuration

@RequiredArgsConstructor

public class MetricsConfiguration {

private final MeterRegistry meterRegistry;

@Bean

public Counter ordersCreated() {

return Counter.builder("orders.created")

.description("Total numbers of created orders")

.register(meterRegistry);

}

@Bean

public Timer orderProcessingTime() {

return Timer.builder("order.processing.time")

.description("Time taken to process orders")

.register(meterRegistry);

}

}

Metrics Configuration
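A timer registered as order.processing.time is exported to Prometheus as a count, a sum, and a max series (for example order_processing_time_seconds_max, which the dashboard queries). The plain-Java sketch below shows what those three values represent. It is not Micrometer's implementation, and `TimerSketch` is a hypothetical class.

```java
import java.util.function.Supplier;

// Plain-Java sketch of the three values a timer exposes; not Micrometer's implementation
// (Micrometer's max, for instance, decays over a time window).
final class TimerSketch {

    private long count;
    private long totalNanos;
    private long maxNanos;

    // Times the supplied work and returns its result, similar in spirit to Timer.record(Supplier).
    <T> T record(Supplier<T> work) {
        long start = System.nanoTime();
        try {
            return work.get();
        } finally {
            add(System.nanoTime() - start); // recorded even if the work throws
        }
    }

    // Adds one measurement; synchronized so concurrent requests do not lose updates.
    synchronized void add(long nanos) {
        count++;
        totalNanos += nanos;
        maxNanos = Math.max(maxNanos, nanos);
    }

    synchronized long count() { return count; }
    synchronized long totalNanos() { return totalNanos; }
    synchronized long maxNanos() { return maxNanos; }
    synchronized double meanNanos() { return count == 0 ? 0.0 : (double) totalNanos / count; }

    public static void main(String[] args) {
        TimerSketch timer = new TimerSketch();
        String result = timer.record(() -> "order created");
        System.out.println(result + ", recorded calls: " + timer.count());
    }
}
```

Dividing the sum by the count gives the mean duration, which is the basis for average-latency queries in Prometheus.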

And update OrderService.java:

package io.vrnsky.order.service;

import io.micrometer.core.instrument.Counter;

import io.micrometer.core.instrument.MeterRegistry;

import io.micrometer.core.instrument.Timer;

import io.vrnsky.order.config.OrderEventProducer;

import io.vrnsky.order.model.Order;

import io.vrnsky.order.model.OrderEvent;

import io.vrnsky.order.model.OrderStatus;

import lombok.RequiredArgsConstructor;

import org.springframework.stereotype.Service;

import java.util.HashMap;

import java.util.Map;

import java.util.UUID;

@Service

@RequiredArgsConstructor

public class OrderService {

private final Map<UUID, Order> orders = new HashMap<>();

private final OrderEventProducer orderEventProducer;

private final Counter ordersCreated;

private final Timer orderProcessingTime;

public Order createOrder(Order order) {

return orderProcessingTime.record(() -> {

ordersCreated.increment();

order.setId(UUID.randomUUID());

order.setStatus(OrderStatus.CREATED);

orders.put(order.getId(), order);

OrderEvent event = new OrderEvent();

event.setOrderId(order.getId());

event.setCustomerEmail(order.getCustomerEmail());

event.setStatus(order.getStatus());

event.setMessage("Order created successfully");

event.setTimestamp(System.currentTimeMillis());

orderEventProducer.sendEvent(event);

return order;

});

}

public Order getOrder(UUID orderId) {

return orders.get(orderId);

}

public Order updateOrderStatus(UUID id, OrderStatus status) {

Order order = orders.get(id);

if (order != null) {

order.setStatus(status);

OrderEvent event = new OrderEvent();

event.setOrderId(order.getId());

event.setCustomerEmail(order.getCustomerEmail());

event.setStatus(order.getStatus());

event.setMessage(String.format("Status for order with id %s was updated to %s", id, status));

event.setTimestamp(System.currentTimeMillis());

// Publish the status change so that consumers are notified

orderEventProducer.sendEvent(event);

}

return order;

}

}

Add metrics to the notification service:

package io.vrnsky.notification.config;

import io.micrometer.core.instrument.Counter;

import io.micrometer.core.instrument.MeterRegistry;

import lombok.RequiredArgsConstructor;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

@Configuration

@RequiredArgsConstructor

public class MetricsConfig {

private final MeterRegistry meterRegistry;

@Bean

public Counter notificationSent() {

return Counter.builder("notifications.sent")

.description("Number of notifications sent")

.register(meterRegistry);

}

@Bean

public Counter notificationFailures() {

return Counter

.builder("notifications.failures")

.register(meterRegistry);

}

}

Metrics Configuration for notification service

package io.vrnsky.notification.service;

import io.micrometer.core.instrument.Counter;

import io.vrnsky.notification.model.NotificationRequest;

import lombok.RequiredArgsConstructor;

import lombok.extern.slf4j.Slf4j;

import org.springframework.stereotype.Service;

@Slf4j

@Service

@RequiredArgsConstructor

public class NotificationService {

private final Counter notificationSent;

private final Counter notificationFailures;

public void sendEmail(NotificationRequest notificationRequest) {

// In a real application, this would use an email service

// like SendGrid, Amazon SES, etc.

log.info("Sending email notification: {}", notificationRequest);

try {

notificationSent.increment();

} catch (Exception e) {

notificationFailures.increment();

throw e;

}

}

public void sendSms(NotificationRequest notificationRequest) {

// In a real application, this would use an SMS service

// like Twilio, Amazon SNS, etc.

log.info("Sending sms notification: {}", notificationRequest);

try {

notificationSent.increment();

} catch (Exception e) {

notificationFailures.increment();

throw e;

}

}

}

Updated version of NotificationService.java

Configure the Grafana dashboard. Create grafana/provisioning/dashboards/event-driven-dashboard.json:

{

"annotations": {

"list": [

{

"builtIn": 1,

"datasource": {

"type": "grafana",

"uid": "-- Grafana --"

},

"enable": true,

"hide": true,

"iconColor": "rgba(0, 211, 255, 1)",

"name": "Annotations & Alerts",

"type": "dashboard"

}

]

},

"editable": true,

"fiscalYearStartMonth": 0,

"graphTooltip": 0,

"id": 1,

"links": [],

"panels": [

{

"datasource": {

"type": "prometheus",

"uid": "PBFA97CFB590B2093"

},

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisBorderShow": false,

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"barWidthFactor": 0.6,

"drawStyle": "line",

"fillOpacity": 0,

"gradientMode": "none",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"insertNulls": false,

"lineInterpolation": "linear",

"lineWidth": 1,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "auto",

"spanNulls": false,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

}

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 12,

"x": 0,

"y": 0

},

"id": 4,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"pluginVersion": "11.4.0",

"targets": [

{

"disableTextWrap": false,

"editorMode": "builder",

"expr": "notifications_failures_total",

"fullMetaSearch": false,

"includeNullMetadata": true,

"legendFormat": "__auto",

"range": true,

"refId": "A",

"useBackend": false

}

],

"title": "Notification failures",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus"

},

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisBorderShow": false,

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"barWidthFactor": 0.6,

"drawStyle": "line",

"fillOpacity": 0,

"gradientMode": "none",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"insertNulls": false,

"lineInterpolation": "linear",

"lineWidth": 1,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "auto",

"spanNulls": false,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

}

]

}

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 12,

"x": 0,

"y": 0

},

"id": 1,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"pluginVersion": "11.4.0",

"targets": [

{

"disableTextWrap": false,

"editorMode": "builder",

"expr": "orders_created_total",

"fullMetaSearch": false,

"includeNullMetadata": true,

"legendFormat": "__auto",

"range": true,

"refId": "A",

"useBackend": false

}

],

"title": "Orders Created",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus",

"uid": "PBFA97CFB590B2093"

},

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisBorderShow": false,

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"barWidthFactor": 0.6,

"drawStyle": "line",

"fillOpacity": 0,

"gradientMode": "none",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"insertNulls": false,

"lineInterpolation": "linear",

"lineWidth": 1,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "auto",

"spanNulls": false,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

}

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 12,

"x": 12,

"y": 0

},

"id": 2,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"pluginVersion": "11.4.0",

"targets": [

{

"disableTextWrap": false,

"editorMode": "builder",

"expr": "order_processing_time_seconds_max",

"fullMetaSearch": false,

"includeNullMetadata": true,

"legendFormat": "__auto",

"range": true,

"refId": "A",

"useBackend": false

}

],

"title": "Order Processing Time",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus",

"uid": "PBFA97CFB590B2093"

},

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisBorderShow": false,

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"barWidthFactor": 0.6,

"drawStyle": "line",

"fillOpacity": 0,

"gradientMode": "none",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"insertNulls": false,

"lineInterpolation": "linear",

"lineWidth": 1,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "auto",

"spanNulls": false,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

}

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 12,

"x": 0,

"y": 8

},

"id": 3,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"pluginVersion": "11.4.0",

"targets": [

{

"disableTextWrap": false,

"editorMode": "builder",

"expr": "notifications_sent_total",

"fullMetaSearch": false,

"includeNullMetadata": true,

"legendFormat": "__auto",

"range": true,

"refId": "Total notification query",

"useBackend": false

}

],

"title": "Total notifications ",

"type": "timeseries"

}

],

"preload": false,

"refresh": "",

"schemaVersion": 40,

"tags": [],

"templating": {

"list": []

},

"time": {

"from": "now-6h",

"to": "now"

},

"timepicker": {},

"timezone": "",

"title": "Event-Driven Metrics",

"uid": "de76fj00lyk8wd",

"version": 1,

"weekStart": ""

}

dashboard.yml:

apiVersion: 1
providers:
  - name: 'Default'
    orgId: 1
    folder: ''
    type: file
    disableDeletion: false
    editable: true
    options:
      path: /etc/grafana/provisioning/dashboards

datasources/datasource.yml:

apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: http://prometheus:9090
    isDefault: true

Configure both applications to enable metrics:

management:
  endpoints:
    web:
      exposure:
        include: health,metrics,prometheus
  observations:
    key-values:
      application: ${spring.application.name}
  metrics:
    distribution:
      percentiles-histogram:
        http.server.requests: true
  tracing:
    sampling:
      probability: 1.0

Configuration of Spring Boot application for both microservices

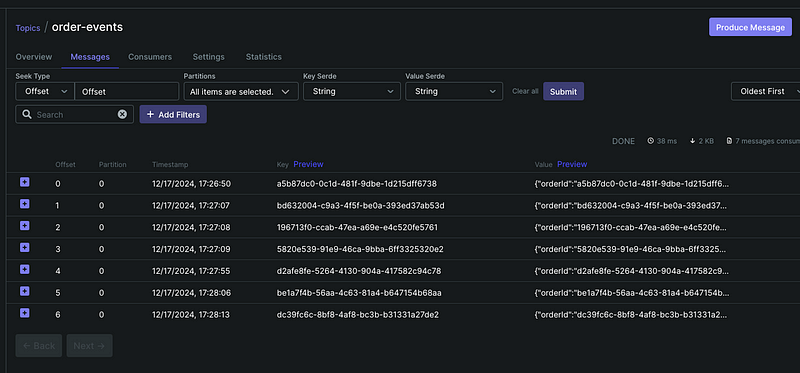

With this setup, you can:

- Watch order creation rates.

- Track notification success/failure rates.

- Track processing times.

- Track Kafka consumer lag.

Access the monitoring:

- Prometheus: http://localhost:9090

- Grafana: http://localhost:3000 (admin/admin)

Extra metrics you might want to add:

- Message processing latency.

- Queue sizes.

- Error rates.

- Consumer group lag.

- System metrics (CPU, memory, etc.).

Let’s first add the alerting configuration and then move on to testing.

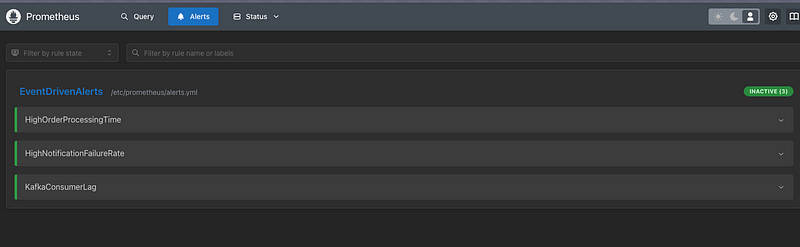

First, let’s configure alerts in Prometheus. Create prometheus/alerts.yml:

groups:
  - name: EventDrivenAlerts
    rules:
      # High order processing time alert
      - alert: HighOrderProcessingTime
        expr: rate(order_processing_time_seconds_sum[5m]) / rate(order_processing_time_seconds_count[5m]) > 2
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: High order processing time detected
          description: Average order processing time is over 2 seconds for the last 5 minutes
      # High notification failure rate
      - alert: HighNotificationFailureRate
        expr: rate(notifications_failures_total[5m]) / rate(notifications_sent_total[5m]) > 0.1
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: High notification failure rate
          description: Notification failure rate is over 10% in the last 5 minutes
      # Kafka consumer lag alert
      # Assumes a metric named kafka_consumer_group_lag is being scraped (for example
      # from a Kafka exporter); adjust the name to whatever your setup exposes
      - alert: KafkaConsumerLag
        expr: kafka_consumer_group_lag > 1000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: High Kafka consumer lag
          description: Consumer group lag is over 1000 messages

Update the Prometheus configuration to include the alerts:

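global:
  scrape_interval: 15s
  evaluation_interval: 15s
rule_files:
  # alerts.yml has to be mounted into the Prometheus container at this path
  - /etc/prometheus/alerts.yml
alerting:
  alertmanagers:
    # Assumes an Alertmanager container named alertmanager is reachable on the Compose network
    - static_configs:
        - targets:
            - 'alertmanager:9093'
# ... rest of the existing configuration

Updated configuration of Prometheus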
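The processing-time rule divides the rate of the timer's sum by the rate of its count, which gives the average duration of the requests seen in the window. The sketch below reproduces that arithmetic for two counter samples taken at the start and end of a window. `AlertMath` is a hypothetical helper, and the sample values are made up for illustration.

```java
// Sketch of the arithmetic behind rate(sum[5m]) / rate(count[5m]);
// the sample values are made up for illustration.
final class AlertMath {

    // Average duration of the requests observed between two counter samples.
    static double averageSeconds(double sumStart, double sumEnd, long countStart, long countEnd) {
        long observed = countEnd - countStart;
        if (observed <= 0) {
            return Double.NaN; // no traffic in the window, so there is no average
        }
        return (sumEnd - sumStart) / observed;
    }

    static boolean shouldFire(double averageSeconds, double thresholdSeconds) {
        // NaN compares false, so a window without traffic never fires.
        return averageSeconds > thresholdSeconds;
    }

    public static void main(String[] args) {
        // 10 requests took 30 seconds in total during the window.
        double average = averageSeconds(10.0, 40.0, 100, 110);
        System.out.println("average = " + average + " s, fire = " + shouldFire(average, 2.0));
    }
}
```

Because both rates use the same window, the window length cancels out and only the two deltas matter.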

Conclusion

Building event-driven microservices requires careful consideration of many aspects, including the basic architecture, monitoring, and production readiness. This article built a practical example that shows how Spring Cloud Stream and Kafka can work together to create a robust, scalable system. Our order processing and notification services show only a small part of the core patterns, but with modifications the example can be adapted to many different business scenarios.

The power of this approach lies in its flexibility and resilience. Spring Cloud Stream hides much of the complexity of using message brokers, and Kafka provides the reliable backbone needed for production systems. We’ve added monitoring with Prometheus and Grafana, which lets us track our system’s health and respond to issues.

For those looking to take this approach to production, there are several important considerations to keep in mind:

- Security should be a top priority, especially for handling sensitive business data.

- Proper logging and tracing are crucial for debugging distributed systems.

- Dead letter queues help manage failed messages in a way that minimizes disruption.

- Circuit breakers protect your own system when downstream services fail.

- Backup and disaster recovery strategies need careful planning.
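To illustrate the circuit breaker item from the list above, here is a minimal count-based sketch in plain Java. After a number of consecutive failures it stops calling the downstream action for a while and returns a fallback instead. The class name, the thresholds, and passing the current time as a parameter (to keep the example deterministic) are all assumptions made for this sketch; libraries such as Resilience4j provide production-grade implementations.

```java
import java.util.function.Supplier;

// Minimal count-based circuit breaker sketch; not a production implementation.
final class CircuitBreakerSketch {

    private final int failureThreshold;
    private final long openMillis;
    private int consecutiveFailures;
    private long openedAt = -1; // -1 means the circuit is closed

    CircuitBreakerSketch(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    // Calls the action unless the circuit is open, in which case the fallback is used.
    synchronized <T> T call(Supplier<T> action, Supplier<T> fallback, long nowMillis) {
        if (openedAt >= 0 && nowMillis - openedAt < openMillis) {
            return fallback.get(); // fail fast while the downstream service recovers
        }
        try {
            T result = action.get();
            consecutiveFailures = 0; // a success closes the circuit again
            openedAt = -1;
            return result;
        } catch (RuntimeException e) {
            if (++consecutiveFailures >= failureThreshold) {
                openedAt = nowMillis; // too many failures in a row: open the circuit
            }
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        CircuitBreakerSketch breaker = new CircuitBreakerSketch(2, 1000);
        Supplier<String> failing = () -> { throw new IllegalStateException("email provider down"); };
        for (long now = 0; now < 30; now += 10) {
            System.out.println(breaker.call(failing, () -> "queued for later", now));
        }
    }
}
```

While the circuit is open, the failing service is not called at all, which protects both the caller's threads and the struggling downstream system.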

The patterns and practices demonstrated here serve as a foundation that you can build upon. The principles are the same, whether you are handling orders, processing payments, or managing IoT device data: decouple your services, handle failures, and track system health.